This week I prepared a vCenter environment for agentless AV for the first time, using Trend Micro Deep Security 9.6 SP1 and NSX Manager 6.2.4. So far it's been a great learning experience (to stay positive about it), so I wanted to share.

Initially (about four months ago) we deployed vShield, and we had problems from day one. Since I'd never worked with Trend Micro Deep Security, I relied on the team that manages it to tell me their requirements. Sometimes this is the quickest way to get things done, but what I realized is that even if something works in one environment, it always helps to validate compatibility across the board. If I had done that, I probably would have saved myself a lot of headaches…

So in anticipation of a "next time", here are my notes from the installation and configuration. Hopefully others will find this information useful, as some of it isn't even covered in the Deep Security Installation Guide.

Please note, this is not a how-to. It's more of a reference for the things we discovered through this process that aren't all documented in one place, so here's my attempt at collecting what I've learned in one place.

After some trial and error (the installation guide isn't very detailed), the process that seems to work best is as follows:

- Validate that the product versions are compatible (vCenter, ESXi, Trend Micro Deep Security, NSX Manager).

- Deploy and configure NSX Manager, then register with vCenter and the Lookup Service.

- Deploy Guest Introspection Services to the cluster(s) requiring protection.

- Register vCenter and NSX Manager in the Trend Micro Deep Security Manager.

- Deploy the Trend Micro Deep Security Service from vSphere Networking and Security in the Web Client.

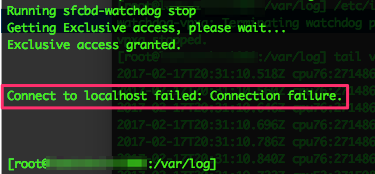

We first set out to deploy this in our datacenter months ago, starting with vShield Manager, which is what the team that manages Trend told us to use. We ran into deployment issues, and "things" were missing from the vSphere Web Client that the documentation said should be there. We had support tickets open with both VMware and Trend Micro for at least a few months, and at one point, because of the errors we were getting, both vendors escalated the issue to their engineering/development teams. In the end, we (the customers) figured out what was causing the problem… DNS lookups.

The Trend Micro Deep Security installation guide does not list this as a hard requirement. The product will let you enter and save IP addresses instead of FQDNs, but it just doesn't work, so use DNS names whenever possible!
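Since DNS turned out to be the root cause, it can help to check each host name before you type it into Deep Security or NSX. The sketch below is a hypothetical pre-flight helper, not part of either product: it flags entries that are raw IP addresses, then confirms that the A record resolves and that the PTR record for that IP points back to the same name. It assumes Python 3.9+ and uses only the standard library; the resolver functions are injectable so the logic can be tested without a live DNS server.

```python
import ipaddress
import socket
import sys
from typing import Callable


def is_ip_literal(name: str) -> bool:
    """Return True if the entry is a raw IP address rather than a DNS name."""
    try:
        ipaddress.ip_address(name)
        return True
    except ValueError:
        return False


def check_dns(
    fqdn: str,
    forward: Callable[[str], str] = socket.gethostbyname,
    reverse: Callable[[str], str] = lambda ip: socket.gethostbyaddr(ip)[0],
) -> list[str]:
    """Return a list of problems for one host; an empty list means A and PTR agree."""
    if is_ip_literal(fqdn):
        return [f"{fqdn} is an IP address; register it by FQDN instead"]
    try:
        ip = forward(fqdn)  # A record lookup
    except OSError as exc:  # socket.gaierror is a subclass of OSError
        return [f"{fqdn}: no A record ({exc})"]
    try:
        ptr_name = reverse(ip)  # PTR record lookup
    except OSError as exc:  # socket.herror is a subclass of OSError
        return [f"{fqdn} -> {ip}: no PTR record ({exc})"]
    # Compare case-insensitively and ignore a trailing root dot.
    if ptr_name.rstrip(".").lower() != fqdn.rstrip(".").lower():
        return [f"{fqdn} -> {ip} -> {ptr_name}: PTR does not match A record"]
    return []


if __name__ == "__main__":
    # Pass your Deep Security Manager, vCenter, and NSX Manager FQDNs as arguments.
    for host in sys.argv[1:]:
        problems = check_dns(host)
        print(host, "OK" if not problems else problems)
```

For example, `python check_dns.py dsm.example.local vcenter.example.local nsxmgr.example.local` (hypothetical names and file name). Note that the reverse lookup goes through the local resolver, so an `/etc/hosts` entry can mask a missing PTR record on the DNS server itself.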

vShield

First of all, after this I wouldn't consider vShield anymore unless I were working in an older environment. In fact, I may just respond with:

If it's EOL, no longer supported, AND incompatible, I won't even try. "Road's closed pizza boy! Find another way home!"

You don't gain anything from deploying EOL software. Most importantly, you won't get security updates when vulnerabilities are discovered, and you won't get any help if you call support about it.

In case you’re reading this and did the same thing I did, here are some things we noticed during this vShield experience:

- Endpoint would not stay installed on some of the ESXi hosts, while it did on others.

- This configuration requires additional work if you're using Auto Deploy and stateless hosts (see VMware KB: 2036701).

- When we deployed the TM DSVAs to hosts where Endpoint did stay installed, installation failed as soon as the appliance deployment started.

- This is where we discovered that FQDNs should be used instead of IP addresses.

- After successfully deploying the DSVAs, we still had problems keeping the virtual appliances and Endpoint registered, so it never actually worked.

Since I hadn't checked up front, I started questioning compatibility in the back of my mind. Sure enough, vShield is EOL and is not compatible with our vCenter and host versions.

VMware NSX Manager 6.2.4

With a little more research, I found that NSX with Guest Introspection has replaced vShield for endpoint/GI services, and as long as that’s all you’re using NSX for, the license is free. With NSX 6.1 and later, there is also no need to “prepare stateless hosts” (see VMware KB: 2120649).

Before simply deploying and configuring, I checked all the compatibility matrices involved and validated that our versions are supported and compatible. These are the versions we used (be sure to check the resource links below, as they contain important compatibility information):

- vCenter: v 6.0 build 5318203

- ESXi: v 6.0 build 5224934

- NSX: v 6.2.4 build 4292526

- Trend Micro Deep Security: v 9.6 SP1

Note: NSX 6.3.2 can be deployed, but it requires at least TMDS 9.6 SP1 Update 3. That's why I went with 6.2.4; I'll upgrade once TMDS is upgraded to a version that supports NSX 6.3.2.
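If you track several environments, the version rule in the note above can be written down as a small gate that a change checklist or script consults before an NSX upgrade. This is a hypothetical sketch, not a Trend Micro or VMware tool: it encodes only the two data points from this post (NSX 6.2.4 running on TMDS 9.6 SP1, and NSX 6.3.2 needing TMDS 9.6 SP1 Update 3 or later), treats "9.6 SP1" as Update 0, and deliberately returns False for any NSX version it knows nothing about, so the official compatibility matrices remain the source of truth.

```python
from typing import NamedTuple


class TmdsVersion(NamedTuple):
    """Deep Security version as (major, minor, service_pack, update)."""
    major: int
    minor: int
    service_pack: int
    update: int


# Only the combinations described in this post; NOT the official matrix.
# Values are the lowest TMDS version known (from this post) to work, which
# may be higher than the true vendor minimum.
KNOWN_MIN_TMDS = {
    (6, 2, 4): TmdsVersion(9, 6, 1, 0),  # what we ran: TMDS 9.6 SP1
    (6, 3, 2): TmdsVersion(9, 6, 1, 3),  # needs TMDS 9.6 SP1 Update 3+
}


def nsx_supported(nsx: tuple[int, int, int], tmds: TmdsVersion) -> bool:
    """True only if this NSX version is listed and TMDS meets its minimum."""
    minimum = KNOWN_MIN_TMDS.get(nsx)
    if minimum is None:
        return False  # unknown combination: check the matrices by hand
    return tmds >= minimum  # NamedTuples compare field by field, like tuples
```

For example, `nsx_supported((6, 3, 2), TmdsVersion(9, 6, 1, 0))` is False, which matches the reason for staying on 6.2.4.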

Resources:

- Correlating build numbers and versions of VMware products (1014508)

- VMware Product Interoperability Matrices

- Trend Micro Compatibility Matrix

- NSX Ports and Protocols

- Trend Micro Ports

- Compatibility between NSX 6.2.3 and 6.2.4 with Deep Security

What I’ve Learned

Here are some tips to ensure a smooth deployment of NSX Manager 6.2.4 and Trend Micro Deep Security 9.6 SP1.

- Ensure your NTP servers are correct and reachable.

- Use IP pools if at all possible when deploying Guest Introspection services from NSX Manager (this makes deployment easier and quicker).

- Set up a datastore that houses ONLY NSX-related appliances (this also makes deployment easier and quicker).

- When you first set up NSX Manager, be sure to grant your user account or domain group admin access to it; otherwise, you won't see it in the vSphere Web Client unless you're logged in with the administrator@vsphere.local account.

- Validate that DNS A and PTR records exist for the Trend Micro Deep Security Manager, vCenter, and NSX Manager; otherwise, anything you do in Deep Security to register your environment will fail.

- Pay close attention to the known issues and workarounds in the "Compatibility between NSX 6.2.3 and 6.2.4 with Deep Security" reference above, because you will run into the errors/failures they describe.

- If deploying across separate datacenters or firewalls, be sure to open all the necessary ports (see the NSX and Trend Micro port references above).

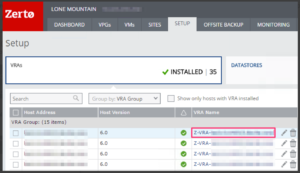

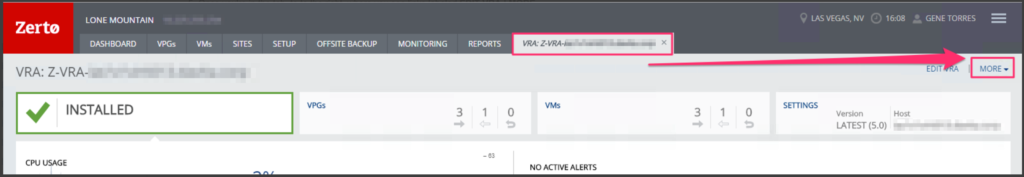

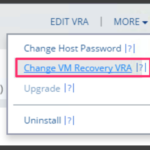

- Unlike vShield Manager, which deploys Endpoint host by host, NSX Manager deploys Guest Introspection at the cluster level. With NSX you can't deploy GI to a single host; you can only select a cluster to deploy to.
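When the components sit in separate datacenters or behind firewalls, a quick TCP reachability test from each side can rule out blocked ports before you start registering things. The sketch below is a minimal, standard-library-only example (Python 3.9+); the host names in `EXAMPLE_TARGETS` are hypothetical, and the port numbers are assumed defaults (443 for the vCenter and NSX Manager HTTPS interfaces, 4119 for the Deep Security Manager console), so confirm the real list against the NSX Ports and Protocols and Trend Micro Ports references.

```python
import socket


def tcp_port_open(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and name-resolution failures.
        return False


def check_ports(
    targets: dict[str, list[int]], timeout: float = 3.0
) -> dict[tuple[str, int], bool]:
    """Probe every (host, port) pair and return the result for each."""
    return {
        (host, port): tcp_port_open(host, port, timeout)
        for host, ports in targets.items()
        for port in ports
    }


# Hypothetical hosts and assumed default ports; adjust for your environment.
EXAMPLE_TARGETS = {
    "vcenter.example.local": [443],
    "nsxmgr.example.local": [443],
    "dsm.example.local": [4119],
}
```

Running `check_ports(EXAMPLE_TARGETS)` from the machine on each side of the firewall shows which pairs are blocked. A connect test only covers TCP; UDP services such as NTP (port 123) need a protocol-level query to verify.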

If you’ve found this useful in your deployment, please comment and share! I’d like to hear from others who have experienced the same!